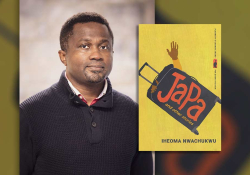

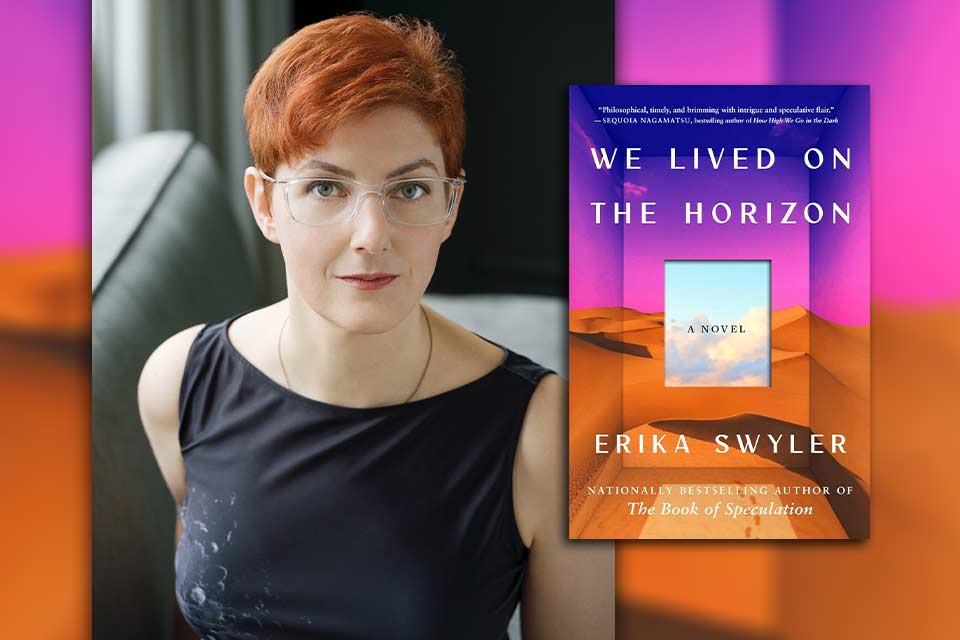

Writing against Classical Evil AI: A Conversation with Erika Swyler

Erika Swyler is the best-selling author of the critically acclaimed novels Light from Other Stars and The Book of Speculation. Her essays and short fiction have appeared in Catapult, Literary Hub, VIDA, the New York Times, and elsewhere. A graduate of NYU’s Tisch School of the Arts, she seeks to make work that is in dialogue with the arts, sciences, and history.

Rita Chang-Eppig: Thanks for speaking with me today, Erika, and congratulations on We Lived on the Horizon. This is a novel of philosophical ideas but also a novel of lovable characters. There’s intellect and there’s heart, which is what I’ve come to associate with your work.

One of the main characters is Nix, an AI who has been confined to a humanoid body so they can better serve their human creator. Nix is probably my favorite character in the book. You do such a good job of making them feel simultaneously human and machine, such as the way they read emotions in color hexadecimal codes. Tell me a little about how you got into the head of a machine.

Erika Swyler: Nix was by far the hardest voice to find because they needed to feel not human but relatable, and the voice had to change over the course of the novel because they’re undergoing a significant transformation. A thing that irked me in a lot of fiction was how seamlessly people depict AI functioning inside a body. That seems like it would be traumatic, going from being a consciousness that’s a cloud of machine processes to suddenly having all this sensory input that you have no basis to understand. I wanted to focus on everything that could possibly go wrong with the human body for someone who’s never encountered it. Too much emotion, too limiting. What is pain? To have that processing in an infantile way but with more sophisticated thought.

I also don’t see as much fiction as I’d like that approaches machine thinking from a point of view different from human thinking. With machines, there are lots of different hubs, networks, silos, and servers, where you process and store data. That’s not how the human brain works at all. I wanted to play with plural consciousness and how the “they” pronoun means literally all parts of Nix—and what it would be like to be slowly cut off from yourself. I become instantly sympathetic to any character going through that kind of trauma and feeling baffled by it.

Chang-Eppig: I grew up watching anime like Ghost in the Shell, and I noticed some of those themes in your writing, themes like plural consciousness. I also sensed a bit of Octavia Butler in the way that you talked about future dystopias. I’m curious: When you were drafting this novel, what kinds of sources did you use? What influences were on your mind?

Swyler: Butler came to mind for me for the community-driven nature of things. I find her work is really focused on how we continue, how we go forward, and who we rely on. Politically, I think it’s difficult to avoid being influenced by Le Guin. The Dispossessed and The Left Hand of Darkness were major driving factors for me.

I tried to not read too much of what everybody was doing currently because I’m easily intimidated by other people’s work. I’ll think, “Oh, well, they’ve done it better than I possibly could, so I should stop.” Autonomy was my early working title, and then I read Annalee Newitz’s Autonomous and nearly got derailed. I had to go back and read what I read as a kid that made me love sci-fi in the first place and figure out what I wanted to take from it and what I wanted to write against. How did I want to write knowing that there are Asimov’s laws of robotics that so many writers work from, whether we mean to or not? It’s difficult to write about AI and robots and whatnot without reading Asimov and Philip K. Dick. If you read enough Asimov as someone who is not a cis white male, there are lots of things you wind up wanting to write against.

Chang-Eppig: Speaking of the laws of robotics, one of the things you’ve said is that you really wanted to avoid the trope of the evil robot trying to take over the world. Obviously, anyone who has read Asimov knows the laws: You can’t harm a human. You can’t—I forget the exact order—you can’t allow harm to come to a human through inaction, etc. At the same time, you’re writing in a media environment that’s full of images like Skynet and the Matrix. Why was it important for you to portray something different, even though that’s the selling narrative right now in the US?

Swyler: Something that bothers me with conversations around AI is when people use mental illness terminology to describe machines and programs. Most of the things that we’re calling mental illness are how those programs are designed to function. When we say AI is hallucinating, like when ChatGPT makes up things that don’t exist—that’s not a hallucination. That’s what it’s supposed to do. It’s supposed to make something that could sort of pass for fact or human creation.

HAL 9000 in the Arthur C. Clarke books is not insane, though it’s portrayed that way. Across science fiction, a lot of evil robots, “insane” robots, come from when humans give the machines commands that are conflicting or that they can’t possibly do. Or the machine functions as it’s supposed to, and the “evil” is actually something that humans programmed it to do.

I really like to write against Harlan Ellison’s “I Have No Mouth, and I Must Scream.” It’s classic “evil AI.” But again, what has happened is that it’s reached the end of a poorly thought-out human command. It achieved its purpose, so now what does it do? I wanted to take that apart and think about what tech might do when you try to design it to be human-forward, for the good of people.

Chang-Eppig: That’s a very good point that you’re making. In many ways, these “evil robots” are functioning the way they were built, and it’s unfair to attribute intentionality or malice to what they’re trying to do. In your ideal world, what would discussions of AI look like?

Swyler: What we’re calling “AI” right now, we talk about mostly in the realm of the humanities and the arts. Unfortunately, that’s what’s getting a lot of attention in the public, and those are the areas in which it’s least effective. It also involves a lot of theft. For example, my books were scraped. I’m not thrilled about that. But there are areas where we can use AI in ways that don’t affect people’s jobs, that offer possibilities, and that can be scaled down in a way that’s less impactful on the environment, which has to be everyone’s number-one concern.

I think people-forward AI has to be about things like positing molecules for drug development that can then be tested and trialed to speed up processes. That’s already happening. I want to think about how we use this for better public health. How do we use this to recognize patterns of inequality? It has to be much smaller and much more focused on areas where we actually need help as opposed to the arts and writing emails.

Chang-Eppig: Of course, one of the tricks to people-driven AI is that you have to build it using people who are actually thinking about other people, about the greater good.

Swyler: Yep.

Chang-Eppig: Which brings me to my next question: I remember when this book first sold, you made a joke on social media about how you had finally written a timely book. You were talking about AI, and the book is still timely in that regard. But then the shooting of the UnitedHealthcare CEO happened, and when I saw just how many people online were celebrating the murder because they’d lost loved ones to insurance companies’ denials or because they were in medical debt, my first thought was, Wow, Erika has written a timely book in more ways than one. Because this book is also about revolution. It can be read as a story about people with generational debt rising up against people with generational wealth. But you were very evenhanded in how you wrote about revolution, how it can be both necessary and violent. Tell me about your thought process while writing about this topic and what you want readers to understand.

Swyler: In a lot of ways, this book is about revolution and what uprisings sometimes look like. I wanted to explore how we make groups of people into monoliths. It’s popular discourse right now, especially online, to say things like “Eat the rich.” That’s fun enough to type and casually joke about, but there’s no such thing as a bloodless revolution. I want people to understand what things look like. People will die. Is that acceptable?

I personally don’t have sympathy for billionaires, but I wanted to also ask if it’s possible to grow up in the shelter of enormous wealth and still be a human being who cares deeply about other people outside of that sheltered world.

I did get a bunch of texts the morning of December 4 saying, Oh my God, your book . . . That made my stomach flip a little bit. But when you read history, you see this isn’t an uncommon occurrence. It just doesn’t get written about as much as it should because those in power keep things quiet for obvious reasons.

Chang-Eppig: Right. Your descriptions of the revolution reminded me a little bit of my grandparents’ stories about escaping China in 1949. There are a lot of people who believe that everyone who died during the Communist Revolution deserved it because they were these evil elites, and sure, many of them were. But some of them were people who just got caught in the crossfire because they were perceived as being part of the elite class.

Swyler: That’s the thing. I don’t think people need to be sympathetic to billionaires. But I think when you start saying things like “Eat the rich,” “rich” quickly becomes a difficult category to define. If you have one cow, the guy who has two is wealthy. It’s dangerous to see only groups, not people. And there is no good answer. That’s being alive. It’s wandering through these muddy areas and trying to do the best you can for the most people you can while also staying alive.

Chang-Eppig: Yeah, let’s talk about doing good. Another interesting theme you explore is the concept of altruism. What motivates people to altruistic acts, and is it really altruism when there’s a personal benefit? For example, we have these Body Martyrs who literally donate parts of themselves to others, and this leads to a great decrease in a life debt that they would otherwise never work off. I think many people would argue that that’s not altruism, it’s coercion, even if the martyrs believe it to be altruism. What made you want to explore ideas around altruism and “charity”? How do you see altruism as playing into the decisions of different characters, especially a nonhuman character like Nix?

Swyler: My first inkling came from a story I was listening to on NPR about people who donate kidneys to strangers. It’s not via request. They go in and say, “I want to donate an organ.” That’s a form of extreme altruism, but it has been present throughout history.

When you study societies, you start to see there are always people who are motivated to do good for the group, sometimes at a huge personal cost. That’s part of how we as a species stay alive. I started to think about it in terms of governments and exploitation. Governments, especially some that we’ve had recently, rely on altruists to keep things going. These governments say, “We will cancel X, Y, and Z because you’ll figure out a way to carry on through mutual aid.” They assume that will happen. If it didn’t, the entire population would go away and there’d be no one to have power over. The assumption that citizens will save one another is baked into society.

I wanted to see what altruism looked like from different class perspectives and also from the perspective of a nonhuman. I think it’s hilarious how many tech folks who are so interested in pushing AI forward claim to be “effective altruists.” That’s a version of altruism that’s gotten so convoluted it’s become altruism’s opposite. If you look at data points for what’s best for societies, the data that any machine consciousness would look at, the billionaire class shouldn’t exist.

Chang-Eppig: This reminds me of a thing I read that said the necessity of charity indicates a broken system. In a well-functioning system, people would get their basic needs met, and there wouldn’t be such a need. So the fact that we do need people to donate tons of money and resources is a condemnation of our society.

Swyler: Exactly.

Chang-Eppig: What are you working on next?

Swyler: I’ve been trying to find a good way to describe this because it’s an odd one. It’s about a dying diplomat and her daughters, and a train trip to an underground city where the diplomat’s heart will be kept alive. So, aging and moving forward, but to what?

December 2024